Although network applications are diverse and have many interacting components, software is almost always at their core. Recall from Section 1.2 that a network application's software is distributed among two or more end systems (that is, host computers). For example, with the Web there are two pieces of software that communicate with each other: the browser software in the user's host (PC, Mac, or workstation), and the Web server software in the Web server. With Telnet, there are again two pieces of software in two hosts: software in the local host and software in the remote host. With multiparty video conferencing, there is a software piece in each host that participates in the conference.

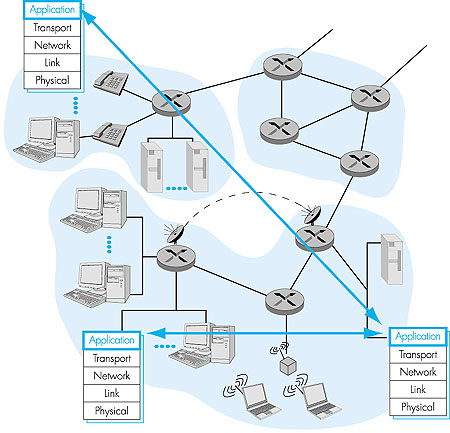

In the jargon of operating systems, it is not actually software pieces (that is, programs) that communicate but processes. A process can be thought of as a program that is running within an end system. When communicating processes are running on the same end system, they communicate with each other using interprocess communication. The rules for interprocess communication are governed by the end system's operating system. In this book, however, we are not interested in how processes on the same host communicate, but in how processes running on different end systems (with potentially different operating systems) communicate. Processes on two different end systems communicate with each other by exchanging messages across the computer network. A sending process creates and sends messages into the network; a receiving process receives these messages and possibly responds by sending messages back (see Figure 2.1). Networking applications have application-layer protocols that define the format and order of the messages exchanged between processes, as well as the actions taken on the transmission or receipt of a message.

Figure 2.1: Communicating applications

The application layer is a particularly good place to start our study of protocols. It's familiar ground. We're acquainted with many of the applications that rely on the protocols we will study. It will give us a good feel for what protocols are all about and will introduce us to many of the same issues that we'll see again when we study transport, network, and data-link layer protocols.

It is important to distinguish between network applications and application-layer protocols. An application-layer protocol is only one piece (albeit a big piece) of a network application. Let's look at a couple of examples. The Web is a network application that allows users to obtain "documents" from Web servers on demand. The Web application consists of many components, including a standard for document formats (that is, HTML), Web browsers (for example, Netscape Navigator and Microsoft Internet Explorer), Web servers (for example, Apache, Microsoft, and Netscape servers), and an application-layer protocol. The Web's application-layer protocol, HTTP (the HyperText Transfer Protocol [RFC 2616]), defines how messages are passed between browser and Web server. Thus, HTTP is only one piece of the Web application. As another example, consider the Internet electronic mail application. Internet electronic mail also has many components, including mail servers that house user mailboxes, mail readers that allow users to read and create messages, a standard for defining the structure of an e-mail message, and application-layer protocols that define how messages are passed between servers, how messages are passed between servers and mail readers, and how the contents of certain parts of the mail message (for example, a mail message header) are to be interpreted. The principal application-layer protocol for electronic mail is SMTP (Simple Mail Transfer Protocol [RFC 821]). Thus, SMTP is only one piece (albeit a big piece) of the e-mail application.
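To make "format of the messages" concrete, the following is a minimal sketch of what one HTTP message looks like on the wire. The helper names `build_get_request` and `parse_status_line` are hypothetical, chosen only for illustration, and the request carries far fewer header lines than a real browser would send.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Format a minimal HTTP/1.1 GET request (illustrative helper)."""
    lines = [
        f"GET {path} HTTP/1.1",  # request line: method, path, protocol version
        f"Host: {host}",         # header naming the server being addressed
        "Connection: close",     # ask the server to close after responding
        "",                      # blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Split the first line of an HTTP response into version, code, reason."""
    first_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = first_line.split(" ", 2)
    return version, int(code), reason
```

The protocol, not the browser or the server product, fixes this layout; any client that emits these bytes and any server that understands them can interoperate.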

Clients and Servers

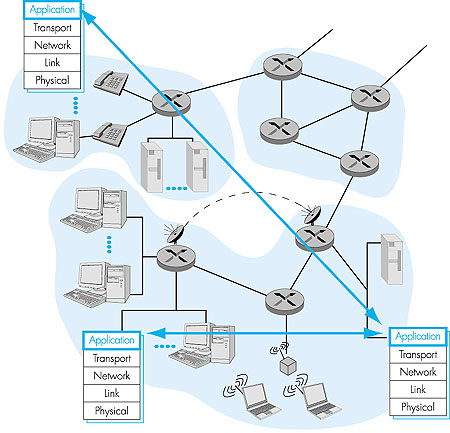

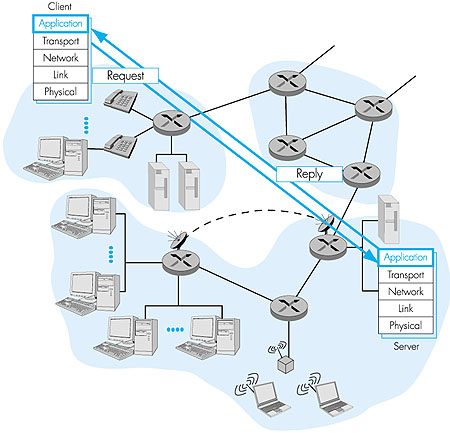

A network application protocol typically has two parts or "sides," a client side and a server side. See Figure 2.2. The client side in one end system communicates with the server side in another end system. For example, a Web browser implements the client side of HTTP, and a Web server implements the server side of HTTP. In e-mail, the sending mail server implements the client side of SMTP, and the receiving mail server implements the server side of SMTP.

Figure 2.2: Client/server interaction

For many applications, a host will implement both the client and server sides of an application. For example, consider a Telnet session between Hosts A and B. (Recall that Telnet is a popular remote login application.) If Host A initiates the Telnet session (so that a user at Host A is logging onto Host B), then Host A runs the client side of the application and Host B runs the server side. On the other hand, if Host B initiates the Telnet session, then Host B runs the client side of the application. FTP, used for transferring files between two hosts, provides another example. When an FTP session exists between two hosts, then either host can transfer a file to the other host during the session. However, as is the case for almost all network applications, the host that initiates the session is labeled the client. Furthermore, a host can actually act as both a client and a server at the same time for a given application. For example, a mail server host runs the client side of SMTP (for sending mail) as well as the server side of SMTP (for receiving mail).
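The client and server roles can be sketched with a toy request/reply exchange over the loopback interface. This is not Telnet, FTP, or SMTP; the function names, the loopback address, and the "reply to" message format are all assumptions made for the example. The point is only that the side that initiates the session is the client, and that one host (here, one program) can run both sides.

```python
import socket
import threading


def read_until_closed(sock: socket.socket) -> bytes:
    """Collect bytes until the peer signals that it has finished sending."""
    chunks = []
    while True:
        chunk = sock.recv(1024)
        if not chunk:
            break
        chunks.append(chunk)
    return b"".join(chunks)


def run_server_side(listener: socket.socket) -> None:
    """Server side: wait to be contacted, then answer one request."""
    conn, _addr = listener.accept()
    with conn:
        request = read_until_closed(conn)
        conn.sendall(b"reply to " + request)


def run_client_side(port: int, request: bytes) -> bytes:
    """Client side: initiate the session, send a request, read the reply."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(request)
        sock.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        return read_until_closed(sock)


def demo() -> bytes:
    # Port 0 asks the operating system for any free port (illustrative choice).
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        port = listener.getsockname()[1]
        server = threading.Thread(target=run_server_side, args=(listener,))
        server.start()
        reply = run_client_side(port, b"hello")
        server.join()
        return reply
```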

Processes Communicating Across a Network

As noted above, an application involves two processes in two different hosts communicating with each other over a network. (Actually, a multicast application can involve communication among more than two hosts. We shall address this issue in Chapter 4.) The two processes communicate with each other by sending and receiving messages through their sockets. A process's socket can be thought of as the process's door: A process sends messages into, and receives messages from, the network through its socket. When a process wants to send a message to another process on another host, it shoves the message out its door. The process assumes that there is a transportation infrastructure on the other side of the door that will transport the message to the door of the destination process.

Figure 2.3 illustrates socket communication between two processes that communicate over the Internet. (Figure 2.3 assumes that the underlying transport protocol is TCP, although the UDP protocol could be used as well in the Internet.) As shown in this figure, a socket is the interface between the application layer and the transport layer within a host. It is also referred to as the API (application programmers' interface) between the application and the network, since the socket is the programming interface with which networked applications are built in the Internet. The application developer has control of everything on the application-layer side of the socket but has little control of the transport-layer side of the socket. The only control that the application developer has on the transport-layer side is (1) the choice of transport protocol and (2) perhaps the ability to fix a few transport-layer parameters such as maximum buffer and maximum segment sizes. Once the application developer chooses a transport protocol (if a choice is available), the application is built using the transport-layer services offered by that protocol. We will explore sockets in some detail in Sections 2.6 and 2.7.

Figure 2.3: Application processes, sockets, and the underlying transport protocol
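The two kinds of control described above can be sketched with Python's standard `socket` module. The function name and the 64 KB buffer request are assumptions made for illustration, and the operating system is free to round, scale, or cap the buffer size it actually grants.

```python
import socket

REQUESTED_RCVBUF_BYTES = 64 * 1024  # illustrative value, not from the text


def transport_choices_demo() -> dict:
    """Show the two transport-layer knobs an application developer has."""
    # (1) Choice of transport protocol, made when the socket is created.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as tcp_sock, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as udp_sock:
        # (2) A transport-layer parameter: request a receive buffer size.
        tcp_sock.setsockopt(
            socket.SOL_SOCKET, socket.SO_RCVBUF, REQUESTED_RCVBUF_BYTES
        )
        granted = tcp_sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        return {"tcp": tcp_sock.type, "udp": udp_sock.type, "rcvbuf": granted}
```

Everything else on the transport-layer side of the socket, such as how segments are formed and when they are sent, stays under the control of the operating system.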

Addressing Processes

In order for a process on one host to send a message to a process on another host, the sending process must identify the receiving process. To identify the receiving process, one must typically specify two pieces of information: (1) the name or address of the host machine, and (2) an identifier that specifies the identity of the receiving process on the destination host.

Let us first consider host addresses. In Internet applications, the destination host is specified by its IP address. We will discuss IP addresses in great detail in Chapter 4. For now, it suffices to know that the IP address is a 32-bit quantity that uniquely identifies the end system (more precisely, it uniquely identifies the interface that connects that host to the Internet). Since the IP address of any end system connected to the public Internet must be globally unique, the assignment of IP addresses must be carefully managed, as discussed in Section 4.4. ATM networks have a different addressing standard. The ITU-T has specified telephone number-like addresses, called E.164 addresses [ITU 1997], for use in public ATM networks.

In addition to knowing the address of the end system to which a message is destined, a sending application must also specify information that will allow the receiving end system to direct the message to the appropriate process on that system. A receive-side port number serves this purpose in the Internet. Popular application-layer protocols have been assigned specific port numbers. For example, a Web server process (that uses the HTTP protocol) is identified by port number 80. A mail server (using the SMTP protocol) is identified by port number 25. A list of well-known port numbers for all Internet standard protocols can be found in RFC 1700. When a developer creates a new network application, the application must be assigned a new port number.
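The two pieces of addressing information can be sketched as a pair: a 32-bit IP address and a port number. The addresses below come from a range reserved for documentation and are placeholders, not real servers; `ipv4_as_integer` is a hypothetical helper name.

```python
import socket


def ipv4_as_integer(dotted: str) -> int:
    """Return the 32-bit number behind a dotted-decimal IP address."""
    packed = socket.inet_aton(dotted)  # 4 bytes, that is, 32 bits
    return int.from_bytes(packed, "big")


# A destination is named by (host address, port number).
WEB_SERVER = ("192.0.2.10", 80)   # port 80: HTTP
MAIL_SERVER = ("192.0.2.25", 25)  # port 25: SMTP
```

Python's socket calls such as `connect` and `sendto` take exactly this kind of `(address, port)` pair to identify the receiving process.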

User Agents

Before we begin a more detailed study of application-layer protocols, it is useful to discuss the notion of a user agent. The user agent is an interface between the user and the network application. For example, consider the Web. For this application, the user agent is a browser such as Netscape Navigator or Microsoft Internet Explorer. The browser allows a user to view Web pages, to navigate in the Web, to provide input to forms, to interact with Java applets, and so on. The browser also implements the client side of the HTTP protocol. Thus, when activated, the browser is a process that, along with providing an interface to the user, sends/receives messages via a socket. As another example, consider the electronic mail application. In this case, the user agent is a "mail reader" that allows a user to compose and read messages. Many companies market mail readers (for example, Eudora, Netscape Messenger, Microsoft Outlook) with a graphical user interface that can run on PCs, Macs, and workstations. Mail readers running on PCs also implement the client side of application-layer protocols; typically they implement the client side of SMTP for sending mail and the client side of a mail retrieval protocol, such as POP3 or IMAP (see Section 2.4), for receiving mail.

Recall that a socket is the interface between the application process and the transport protocol. The application at the sending side sends messages through the door. At the other side of the door, the transport protocol has the responsibility of moving the messages across the network to the door at the receiving process. Many networks, including the Internet, provide more than one transport protocol. When you develop an application, you must choose one of the available transport protocols. How do you make this choice? Most likely, you will study the services that are provided by the available transport protocols, and you will pick the protocol with the services that best match the needs of your application. The situation is similar to choosing either train or airplane transport for travel between two cities (say, New York and Boston). You have to choose one or the other, and each transport mode offers different services. (For example, the train offers downtown pick up and drop off, whereas the plane offers shorter transport time.)

What services might a network application need from a transport protocol? We can broadly classify an application's service requirements along three dimensions: data loss, bandwidth, and timing.

Some applications, such as electronic mail, file transfer, remote host access, Web document transfers, and financial applications require fully reliable data transfer, that is, no data loss. In particular, a loss of file data, or data in a financial transaction, can have devastating consequences (in the latter case, for either the bank or the customer!). Other loss-tolerant applications, most notably multimedia applications such as real-time audio/video or stored audio/video, can tolerate some amount of data loss. In these latter applications, lost data might result in a small glitch in the played-out audio/video--not a crucial impairment. The effects of such loss on application quality, and actual amount of tolerable packet loss, will depend strongly on the application and the coding scheme used.

Some applications must be able to transmit data at a certain rate in order to be effective. For example, if an Internet telephony application encodes voice at 32 Kbps, then it must be able to send data into the network and have data delivered to the receiving application at this rate. If this amount of bandwidth is not available, the application needs to encode at a different rate (and receive enough bandwidth to sustain this different coding rate) or it should give up, since receiving half of the needed bandwidth is of no use to such a bandwidth-sensitive application. Many current multimedia applications are bandwidth sensitive, but future multimedia applications may use adaptive coding techniques to encode at a rate that matches the currently available bandwidth. While bandwidth-sensitive applications require a given amount of bandwidth, elastic applications can make use of as much or as little bandwidth as happens to be available. Electronic mail, file transfer, remote access, and Web transfers are all elastic applications. Of course, the more bandwidth, the better. There's an adage that says that one cannot be too rich, too thin, or have too much bandwidth.
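As a worked example of what a 32 Kbps encoding rate demands, the sketch below computes how much voice data each packet must carry. The 20-millisecond packet interval is an assumption introduced here, not a figure from the text, and 1 Kbps is taken to mean 1,000 bits per second.

```python
def payload_bytes_per_packet(encoding_rate_bps: int, packet_interval_ms: int) -> int:
    """Bytes of encoded voice produced during one packet interval."""
    bits_per_packet = encoding_rate_bps * packet_interval_ms // 1000
    return bits_per_packet // 8


def packets_per_second(packet_interval_ms: int) -> int:
    """How many packets the sender must emit each second."""
    return 1000 // packet_interval_ms
```

Under these assumptions, 32,000 bits per second over 20-millisecond intervals works out to 640 bits, or 80 bytes, of voice per packet at 50 packets per second. If the network delivers only half of those packets, half of the conversation is missing.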

The final service requirement is that of timing. Interactive real-time applications, such as Internet telephony, virtual environments, teleconferencing, and multiplayer games require tight timing constraints on data delivery in order to be effective. For example, many of these applications require that end-to-end delays be on the order of a few hundred milliseconds or less. (See Chapter 6, [Gauthier 1999; Ramjee 1994].) Long delays in Internet telephony, for example, tend to result in unnatural pauses in the conversation; in a multiplayer game or virtual interactive environment, a long delay between taking an action and seeing the response from the environment (for example, from another player at the end of an end-to-end connection) makes the application feel less realistic. For non-real-time applications, lower delay is always preferable to higher delay, but no tight constraint is placed on the end-to-end delays.

Figure 2.4 summarizes the reliability, bandwidth, and timing requirements of some popular and emerging Internet applications. Figure 2.4 outlines only a few of the key requirements of a few of the more popular Internet applications. Our goal here is not to provide a complete classification, but simply to identify some of the most important axes along which network application requirements can be classified.

| Application | Data loss | Bandwidth | Time sensitive |
|---|---|---|---|
| File transfer | No loss | Elastic | No |
| E-mail | No loss | Elastic | No |
| Web documents | No loss | Elastic (few Kbps) | No |
| Real-time audio/video | Loss-tolerant | Audio: few Kbps to 1 Mbps; video: 10 Kbps to 5 Mbps | Yes: 100s of msec |
| Stored audio/video | Loss-tolerant | Same as above | Yes: few seconds |
| Interactive games | Loss-tolerant | Few Kbps to 10 Kbps | Yes: 100s of msec |
| Financial applications | No loss | Elastic | Yes and no |

Figure 2.4: Requirements of selected network applications

The Internet (and, more generally, TCP/IP networks) makes available two transport protocols to applications, namely, UDP (User Datagram Protocol) and TCP (Transmission Control Protocol). When a developer creates a new application for the Internet, one of the first decisions that the developer must make is whether to use UDP or TCP. Each of these protocols offers a different service model to the invoking applications.

TCP Services

The TCP service model includes a connection-oriented service and a reliable data transfer service. When an application invokes TCP for its transport protocol, the application receives both of these services from TCP.

Connection-oriented service: TCP has the client and server exchange transport-layer control information with each other before the application-level messages begin to flow. This so-called handshaking procedure alerts the client and server, allowing them to prepare for an onslaught of packets. After the handshaking phase, a TCP connection is said to exist between the sockets of the two processes. The connection is a full-duplex connection in that the two processes can send messages to each other over the connection at the same time. When the application is finished sending messages, it must tear down the connection. The service is referred to as a "connection-oriented" service rather than a "connection" service (or a "virtual circuit" service), because the two processes are connected in a very loose manner. In Chapter 3 we will discuss connection-oriented service in detail and examine how it is implemented.

Reliable transport service: The communicating processes can rely on TCP to deliver all data sent without error and in the proper order. When one side of the application passes a stream of bytes into a socket, it can count on TCP to deliver the same stream of data to the receiving socket, with no missing or duplicate bytes.
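The two services above can be observed with a small loopback experiment, sketched here under the assumption that both processes run on the same machine. The function names are hypothetical. The sender writes several pieces into its socket; the receiver reads in deliberately small chunks and still recovers the same bytes in the same order.

```python
import socket
import threading


def receive_stream(listener: socket.socket, received: list) -> None:
    """Accept one connection and read the byte stream until the peer closes."""
    conn, _addr = listener.accept()  # returns once the handshake has completed
    with conn:
        while True:
            chunk = conn.recv(4)     # deliberately small reads
            if not chunk:            # empty result: the sender closed its side
                break
            received.append(chunk)


def tcp_stream_demo(pieces: list) -> bytes:
    """Send the pieces over TCP and return everything the receiver read."""
    received = []
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        reader = threading.Thread(target=receive_stream, args=(listener, received))
        reader.start()
        with socket.create_connection(("127.0.0.1", port)) as sender:
            for piece in pieces:
                sender.sendall(piece)
        # Leaving the with-block closes the sender and tears down the connection.
        reader.join()
    return b"".join(received)
```

Note that TCP preserves the byte stream, not the boundaries between the sender's writes: the receiver cannot tell where one piece ended and the next began unless the application-layer protocol marks it.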

TCP also includes a congestion-control mechanism, a service for the general welfare of the Internet rather than for the direct benefit of the communicating processes. The TCP congestion-control mechanism throttles a process (client or server) when the network is congested. In particular, as we will see in Chapter 3, TCP congestion control attempts to limit each TCP connection to its fair share of network bandwidth.

The throttling of the transmission rate can have a very harmful effect on real-time audio and video applications that have a minimum required bandwidth constraint. Moreover, real-time applications are loss-tolerant and do not need a fully reliable transport service. For these reasons, developers of real-time applications usually run their applications over UDP rather than TCP.

Having outlined the services provided by TCP, let us say a few words about the services that TCP does not provide. First, TCP does not guarantee a minimum transmission rate. In particular, a sending process is not permitted to transmit at any rate it pleases; instead the sending rate is regulated by TCP congestion control, which may force the sender to send at a low average rate. Second, TCP does not provide any delay guarantees. In particular, when a sending process passes data into a TCP socket, the data will eventually arrive at the receiving socket, but TCP guarantees absolutely no limit on how long the data may take to get there. As many of us have experienced with the world-wide wait, one can sometimes wait tens of seconds or even minutes for TCP to deliver a message (containing, for example, an HTML file) from Web server to Web client. In summary, TCP guarantees delivery of all data, but provides no guarantees on the rate of delivery or on the delays experienced.

UDP Services

UDP is a no-frills, lightweight transport protocol with a minimalist service model. UDP is connectionless, so there is no handshaking before the two processes start to communicate. UDP provides an unreliable data transfer service; that is, when a process sends a message into a UDP socket, UDP provides no guarantee that the message will ever reach the receiving socket. Furthermore, messages that do arrive at the receiving socket may arrive out of order.

On the other hand, UDP does not include a congestion-control mechanism, so a sending process can pump data into a UDP socket at any rate it pleases. Although not all of the data may make it to the receiving socket, a large fraction of the data may arrive. Developers of real-time applications often choose to run their applications over UDP. Similar to TCP, UDP provides no guarantee on delay.
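A minimal sketch of the UDP service model, again over the loopback interface, follows. The function name and the one-second timeout are assumptions for the example. There is no connection setup: the sender simply addresses each message to the receiver. Because UDP promises nothing, the receiver gives up after a timeout instead of waiting forever for a message that may never come.

```python
import socket


def udp_demo(messages: list, timeout_s: float = 1.0) -> list:
    """Send each message as a datagram and return whichever ones arrive."""
    received = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(timeout_s)
        address = receiver.getsockname()
        for message in messages:
            sender.sendto(message, address)  # no handshake, no connection
        try:
            for _ in messages:
                data, _source = receiver.recvfrom(2048)
                received.append(data)
        except socket.timeout:
            pass  # a datagram was lost; UDP never promised delivery
    return received
```

On a loopback interface the datagrams will almost always arrive, and in order, but the code is written so that it remains correct when they do not.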

Figure 2.5 indicates the transport protocols used by some popular Internet applications. We see that e-mail, remote terminal access, the Web, and file transfer all use TCP. These applications have chosen TCP primarily because TCP provides the reliable data transfer service, guaranteeing that all data will eventually get to its destination. We also see that Internet telephony typically runs over UDP. Each side of an Internet phone application needs to send data across the network at some minimum rate (see Figure 2.4); this is more likely to be possible with UDP than with TCP. Also, Internet phone applications are loss-tolerant, so they do not need the reliable data transfer service provided by TCP.

| Applications | Application-layer Protocol | Underlying Transport Protocol |
|---|---|---|
| Electronic Mail | SMTP [RFC 821] | TCP |
| Remote Terminal Access | Telnet [RFC 854] | TCP |
| Web | HTTP [RFC 2068] | TCP |
| File Transfer | FTP [RFC 959] | TCP |
| Remote File Server | NFS [McKusik 1996] | UDP or TCP |
| Streaming Multimedia | Proprietary (for example, Real Networks) | UDP or TCP |
| Internet Telephony | Proprietary (for example, Vocaltec) | Typically UDP |

Figure 2.5: Popular Internet applications, their application-layer protocols, and their underlying transport protocols
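The reasoning behind these choices can be condensed into a rough rule of thumb, sketched below. The `Requirements` class and `suggest_transport` function are hypothetical names, and the rule is only a simplification of the discussion above: real applications weigh further factors, which is why Figure 2.5 lists "UDP or TCP" for some of them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """An application's needs along the three dimensions discussed above."""
    loss_tolerant: bool
    needs_minimum_rate: bool
    time_sensitive: bool


def suggest_transport(req: Requirements) -> str:
    """Crude heuristic, not a rule from any standard."""
    if not req.loss_tolerant:
        # Only TCP offers reliable data transfer.
        return "TCP"
    if req.needs_minimum_rate or req.time_sensitive:
        # Avoid TCP's congestion-control throttling; tolerate some loss.
        return "typically UDP"
    return "UDP or TCP"


FILE_TRANSFER = Requirements(
    loss_tolerant=False, needs_minimum_rate=False, time_sensitive=False
)
INTERNET_TELEPHONY = Requirements(
    loss_tolerant=True, needs_minimum_rate=True, time_sensitive=True
)
```

Applied to the two examples, the heuristic suggests TCP for file transfer and UDP for Internet telephony, matching the corresponding rows of Figure 2.5.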

As noted earlier, neither TCP nor UDP offers timing guarantees. Does this mean that time-sensitive applications cannot run in today's Internet? The answer is clearly no--the Internet has been hosting time-sensitive applications for many years. These applications often work pretty well because they have been designed to cope, to the greatest extent possible, with this lack of guarantee. We will investigate several of these design tricks in Chapter 6. Nevertheless, clever design has its limitations when delay is excessive, as is often the case in the public Internet. In summary, today's Internet can often provide satisfactory service to time-sensitive applications, but it cannot provide any timing or bandwidth guarantees. In Chapter 6, we will also discuss emerging Internet service models that provide new services, including guaranteed delay service for time-sensitive applications.

© 2000-2001 by Addison Wesley Longman, a division of Pearson Education